Show me your brain scan and I'll tell you how old you really are

Artificial neural networks will play an increasingly important role in medical diagnosis

A person's biological age can be accurately determined from brain images using artificial neural networks, a current AI technology. Until now, however, it was unclear which features these networks use to infer age. Researchers at the Max Planck Institute for Human Cognitive and Brain Sciences have now developed an algorithm that reveals that age estimation draws on a whole range of features in the brain, which provide general information about a person's state of health. The algorithm could thus help to detect tumours or Alzheimer's disease more quickly, and it allows conclusions to be drawn about the neurological consequences of diseases such as diabetes.

Symbolic image (credit: Unsplash)

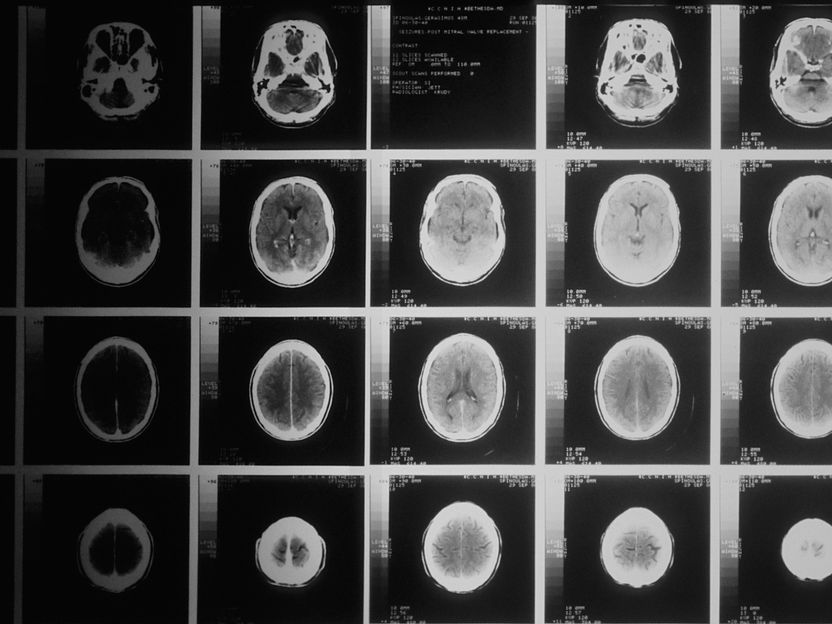

Deep neural networks are an AI technology that already enriches our everyday lives on many levels: the artificial networks, which are modelled on the way real neurons work, can understand and translate language, interpret texts and recognize objects and people in images. But they can also determine a person's age from an MRI scan of their brain. Admittedly, it would be easier to find out the age by simply asking the person. However, machine age determination also gives an idea of what a healthy brain normally looks like at different stages of life. If the network estimates the biological age of the brain to be higher than the person's actual age, that can indicate possible disease or injury. Previous studies have found, for example, that the brains of people with certain conditions, such as diabetes or severe cognitive impairment, appeared to be older than the people actually were. In other words, the brains were in a biologically worse state than one would expect from these people's age.
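The comparison described above, estimated brain age against actual age, can be sketched in a few lines. This is only an illustration: the function names, the example values and the five-year threshold are assumptions made for this sketch and do not come from the study.

```python
def brain_age_gap(predicted_age: float, chronological_age: float) -> float:
    """Estimated brain age minus the person's actual age, in years."""
    return predicted_age - chronological_age


def flag_older_looking_brains(records, threshold_years=5.0):
    """Return the IDs whose brain looks older than expected.

    The threshold is an arbitrary illustrative value, not a clinical cut-off.
    """
    return [
        person_id
        for person_id, predicted, actual in records
        if brain_age_gap(predicted, actual) > threshold_years
    ]


# Made-up example values: (ID, estimated brain age, actual age)
records = [
    ("P01", 61.0, 60.0),
    ("P02", 72.5, 64.0),
    ("P03", 48.0, 52.0),
]

print(flag_older_looking_brains(records))  # ['P02']
```

A positive gap means the brain looks older than the person is, and a negative gap means it looks younger. In this made-up sample only P02, with a gap of 8.5 years, exceeds the threshold.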

Although artificial neural networks can accurately determine biological age, until now it was not known what information in the brain images they use to do so. AI researchers refer to this as the "black box problem": you feed a brain image into the model, the "black box", let it process the image, and ultimately get only its answer. Because of the complexity of the networks, it was previously unclear how this answer is generated. Scientists at the Max Planck Institute for Human Cognitive and Brain Sciences (MPI CBS) in Leipzig therefore wanted to open the black box: What does the model look at to arrive at its result, the brain age? To do this, they worked with the Fraunhofer Institute for Telecommunications in Berlin to develop a new interpretation algorithm that can be used to analyse the age estimates of the networks.
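What "opening the black box" means can be illustrated with a deliberately simple stand-in. The sketch below is not the researchers' interpretation algorithm and not a deep network: it assumes a toy linear model in which each input feature contributes its weight times its value to the age estimate. All feature names, weights and values are invented for illustration.

```python
def predict_age(features, weights, bias):
    """Toy linear age estimate: bias plus the weighted sum of the features."""
    return bias + sum(weights[name] * value for name, value in features.items())


def contributions(features, weights):
    """Split the estimate into one signed contribution per feature."""
    return {name: weights[name] * value for name, value in features.items()}


# Hypothetical features, expressed as deviations from an average brain
features = {
    "ventricle_size": 1.5,
    "furrow_width": -0.5,
    "white_matter_lesions": 2.0,
}
# Hypothetical weights: years added per unit of deviation
weights = {
    "ventricle_size": 4.0,
    "furrow_width": 3.0,
    "white_matter_lesions": 2.0,
}
bias = 55.0  # hypothetical baseline age of the toy model

estimate = predict_age(features, weights, bias)
parts = contributions(features, weights)

print(estimate)  # 63.5
print(parts)     # {'ventricle_size': 6.0, 'furrow_width': -1.5, 'white_matter_lesions': 4.0}
```

The contributions add up to the estimate minus the baseline (8.5 years here), so each feature can be read as pushing the estimate up or down. For a deep network the attribution is far harder to compute, which is why a dedicated interpretation algorithm is needed.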

"This is the first time we have applied the interpretation algorithm in a complex regression task," explains Simon M. Hofmann, a PhD candidate at MPI CBS and first author of the underlying study, which has now appeared in the journal NeuroImage. "We can now determine exactly which regions and features of the brain are indicative of a higher or lower biological age."

The analysis showed that the artificial neural networks use the white matter, among other things, to make their predictions. In particular, they look at how many small cracks and scars run through the nerve tissue in the brain. They also analyse how wide the furrows in the cerebral cortex are and how large the cavities, the so-called ventricles, are. Previous studies have shown that the older a person is, the larger their furrows and ventricles are on average. Interestingly, the artificial neural networks arrived at these results on their own, without having been given this information. During their training phase, all they had available were the brain scans and each person's actual age in years.

"Of course, an increased age estimate can also be interpreted as an error of the model," says Veronica Witte, the leader of the research group. "But we were able to show that these deviations are biologically significant." For example, the researchers confirmed that people with diabetes have an increased brain age, and they were able to show that those affected have more lesions in the white matter.

It is already clear that artificial neural networks will play an increasingly important role in medical diagnosis. Knowing what these algorithms are guided by will therefore become increasingly important as well: in the future, a brain scan could be automatically analysed by different networks, each specializing in a certain area. One draws conclusions about Alzheimer's disease, another about tumours, and yet another about possible mental disorders. "The physician then not only receives feedback that certain diseases may be present. She also sees which areas in the brain underlie the diagnoses," Hofmann explains. The algorithms mark the corresponding features directly in the MRI image, so that medical professionals can detect them more easily and, in turn, draw immediate conclusions about how severe a disease is. It would also be easier to detect misdiagnoses: if the analysis is based on biologically implausible areas, such as errors that occurred when the image was created, the physician can spot this immediately. The research team's interpretation algorithm can thus ultimately also help improve the accuracy of the artificial neural networks themselves.

In a follow-up study, the researchers now want to investigate in more detail why their models also look at features in the brain that have so far played little role in ageing research. It turned out, for example, that the neural networks also focus on the cerebellum. How ageing processes progress there in healthy and diseased people has so far been a mystery to scientists.

Original publication

Simon M. Hofmann, Frauke Beyer, Sebastian Lapuschkin, Ole Goltermann, Markus Loeffler, Klaus-Robert Müller, Arno Villringer, Wojciech Samek, A. Veronica Witte. Towards the interpretability of deep learning models for multi-modal neuroimaging: Finding structural changes of the ageing brain. NeuroImage. 2022 Nov 1;261:119504.