How brain areas communicate shapes human communication

The hearing regions in your brain form new alliances as you try to listen at the cocktail party

The ability to listen to one person while ignoring the distracting sounds and voices that are ever-present in daily life depends on how well the communication between distinct brain regions is tuned for attentive listening. A team consisting of a biomedical engineer, a linguist, and a psychologist at the University of Lübeck has now shown how listening success in such situations comes with a fine-tuned reconfiguration of brain networks.

Imagine yourself in a busy restaurant. You try to listen to your acquaintance on the other side of the table, while being distracted by other sounds and voices around you. We all hear with our ears, obviously, but the ability to focus and maintain attention to a particular talker involves widely distributed regions in the human brain. Moreover, these listening abilities vary from one individual to another. Researchers from the University of Lübeck have now discovered that the flexibility with which an individual reconfigures the communication between distinct brain areas determines how well they can adapt to such a difficult listening situation.

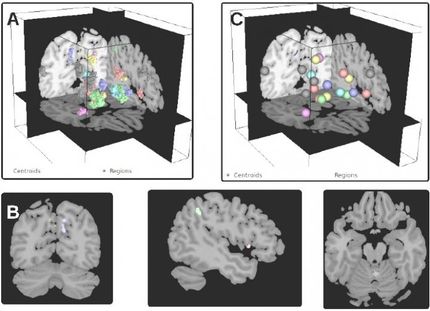

Communication in the brain is organized into different networks. These brain networks closely resemble many other kinds of networks we might be more familiar with: a web of flight connections between airports, or a network of friends on Facebook. What all these network systems have in common is that they consist of many nodes and many connections between these nodes. They share universal properties, a particularly important one being network “modularity”. Modularity describes how strongly individual network nodes are grouped into interconnected modules that allow the coordinated and goal-directed flow of information in the brain.
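For readers who want to see the idea in numbers, the following is a minimal sketch of one common way to quantify modularity (Newman's modularity Q for an unweighted, undirected network). The article does not state which exact measure the researchers used, so the function name `modularity`, the toy network, and the grouping of nodes are all illustrative assumptions, not the study's method.

```python
from collections import defaultdict


def modularity(edges, module_of):
    """Newman's modularity Q for an unweighted, undirected network.

    edges     -- list of (node, node) pairs, each connection listed once
    module_of -- dict mapping every node to the label of its module
    """
    m = len(edges)                   # total number of connections
    within = defaultdict(int)        # connections that stay inside a module
    degree_sum = defaultdict(int)    # summed node degrees per module
    for u, v in edges:
        degree_sum[module_of[u]] += 1
        degree_sum[module_of[v]] += 1
        if module_of[u] == module_of[v]:
            within[module_of[u]] += 1
    # Fraction of within-module connections minus the fraction
    # expected if connections were placed at random.
    return sum(within[c] / m - (degree_sum[c] / (2 * m)) ** 2
               for c in degree_sum)


# Hypothetical toy network: two tightly knit triangles joined by one bridge.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_modules = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
one_module = {node: "A" for node in range(6)}

q_two = modularity(edges, two_modules)
q_one = modularity(edges, one_module)
print(q_two, q_one)
```

Grouping the toy network into its two triangles gives a clearly positive Q, whereas lumping all nodes into a single module gives a Q of zero: the measure rewards groupings in which most connections stay inside a module.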

Scientists from the Auditory Cognition research group at the University of Lübeck have investigated whether the way in which communication between brain areas is organized plays a role in our listening success.

“We reasoned that to adapt to a difficult listening situation, the configuration of these brain networks would need to adapt as well”, explains co-study leader Mohsen Alavash. “We particularly focused on the degree to which the larger brain networks are organized into smaller sub-networks or modules. We reasoned that this configuration should change during listening, when compared to a measurement during rest.”

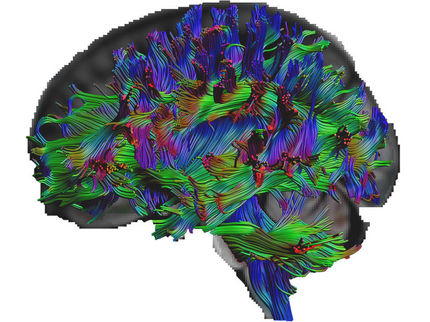

To this end, the researchers used magnetic resonance imaging (MRI) to record brain activity while participants were passively resting inside the scanner and while they had to pay close attention to one of two concurrent talkers presented via headphones. As expected, some individuals could more accurately report on the speech of the to-be-attended talker than others.

Could these differences in the ability to focus on one talker while ignoring another be explained by differences in how brain networks communicate? “We observed a change in the configuration of brain network modules when participants had to switch from passively resting to our challenging listening task”, explains co-study leader Sarah Tune. “Individuals for whom we observed a stronger reconfiguration in the makeup of brain modules, which we interpret as a better adaptation to the task, indeed showed better listening abilities”, adds Jonas Obleser, leader of the Auditory Cognition research group. The researchers found that the network orchestration during listening involved brain areas associated with the processing of sounds and the orienting of attention.

The researchers hope that a better understanding of the brain reconfigurations that accompany inter-individual differences in listening success will advance future efforts in the rehabilitation of speech comprehension and in the development of hearing aids.

